Member-only story

Big O notation : Understanding different time complexities

We already discussed what is Big O and how it helps in writing more clean codes by avoiding nested loops and improving efficiency.

But we haven’t explained Big O types explicitly and how to figure out how to find complexity for a algorithm. Here we will go through each complexity and how to achieve it .

Big O:

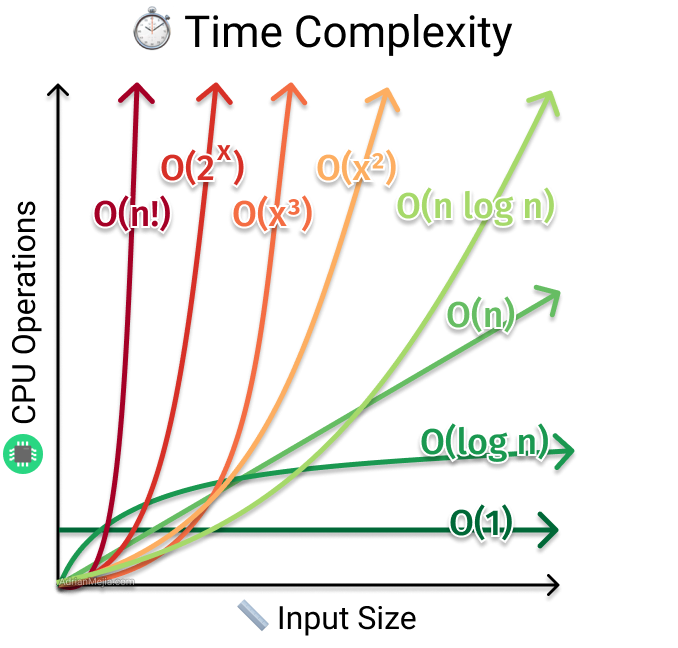

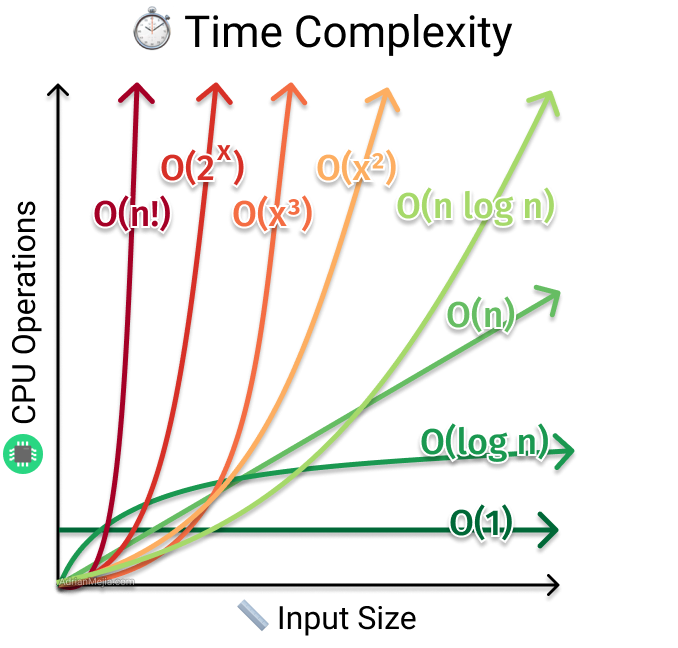

The above image shows different time complexities for Big O. it shows how many times an operation need to be repeated before we get the result for worst case situation.

in the previous article we discussed that there are other complexities also for best case , average and then worst. Big O represents worst case only, as we are most concerned about how system handles worst case conditions.

Eg: for looking for an item in an array

Best case : Ω(1) ( Big Omega )the element is in index 1 so we just need to check the first element , we don’t have to loop through entire array

Average Case: we just have to loop until half of the element to find the index , Θ(log n) (Big Theta)

Worst case: we have to loop through all the array elements if element is near to end Big O(n)

Now lets understand each complexities (Best to worst):

O(1):

Big O(1) means constant or operations that need just one time execution ; eg

let a = 2 // O(1)

let a = b + 2 // O(1)O(logn):

before understanding log n , we need to understand what is log

log shows to what power a base number be raised to get the target number

so for example:

log¹⁰(1000) will be 3 . as 10³ = 1000